The Doctor Is Still the Boss in the AI Era

Date

April 20, 2023

In Brief

Clinicians should always maintain liability for their tools, intelligent or not.

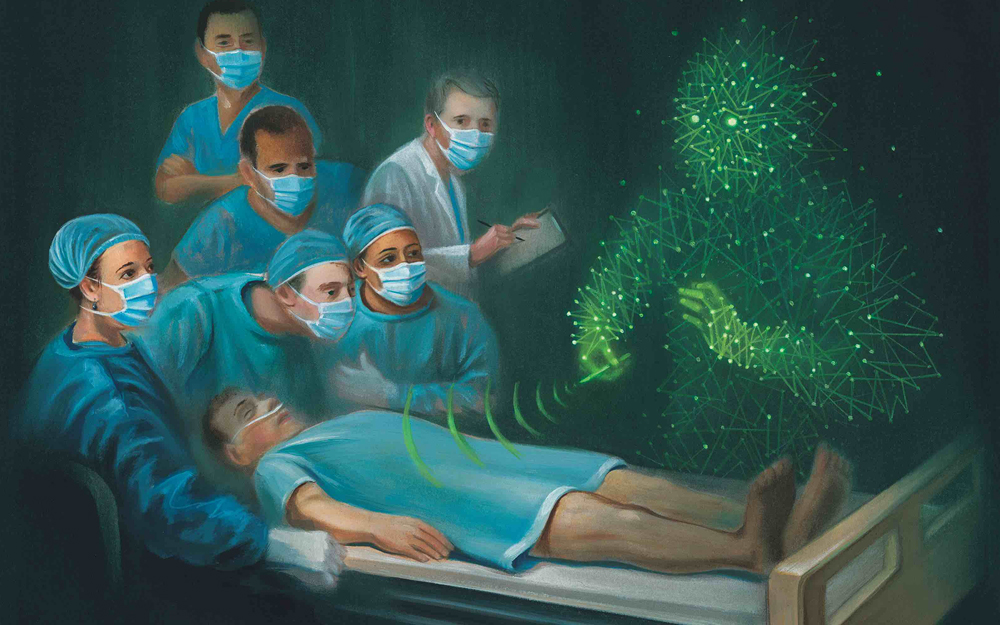

In medicine, every clinical decision along a patient’s medical journey carries consequences for everyone involved. Those consequences can be colossal when life and health are at stake.

When physicians enlist the help of artificial intelligence (AI) to identify patients for clinical trials, flag emergent imaging scans and select medications based on a person’s individual profile, the stakes are high. But the burden of getting it right belongs solely to the people who create and use AI algorithms, says Virginia Bartlett, PhD, assistant director of the Center for Healthcare Ethics at Cedars-Sinai.

"AI is a tool—it is not its own entity," she says. "Both the way it is made and used are still human responsibilities."

As healthcare systems integrate AI into workflows and decision-making, providers are ethically bound to uphold transparency, fairness, harmlessness, responsibility and privacy, according to a global survey of attitudes published in Nature Machine Intelligence.

First and foremost, Dr. Bartlett says, developers should scrutinize systems to ensure they don’t perpetuate biases introduced in their creation. When consulting AI in formulating treatments, physicians should pay most attention to the patients in front of them, in the flesh. Finally, researchers, clinicians and patients alike should seize the opportunity to voice their worries and questions about AI—which often reflect entrenched fears about technology and the ways we interact with illness and healing.

When a physician is tasked with determining, for example, a course of cancer treatment based on an individual’s condition and genetics, AI can offer informed risk-stratification guidance. But a treatment plan formulated by an algorithmic calculation alone may stoke fears about access and discrimination. Physicians must continue to learn and prioritize their patients’ values, goals and preferences, Dr. Bartlett says. AI works only in balance with a physician’s knowledge of the existing literature and consultation with clinical colleagues.

"The physician has to judge how accurate the prediction is," she says. "No matter how fine-grained AI manages to get, we won’t be making decisions in a vacuum. For one person with cancer, a 15% chance of a drug working may be totally worth it, but for somebody else, considering side effects, the burden might not be worth the benefit."

As Cedars-Sinai develops its own AI applications, the organization’s AI Council of clinical, operational and research leaders helps ensure that the technology is built with strong, deliberate strategies and with bias mitigation in mind. Physicians are responsible for ensuring the AI algorithms they employ are accurate and appropriate, and they should engage in critical dialogue with developers, Dr. Bartlett says. The AI Council will facilitate and encourage such collaboration.

The skillful construction of AI tools and the way physicians use them to help answer their questions are the most critical variables in the technology’s success. AI’s usefulness in processing enormous sets of information in search of patterns and trends depends on the careful attention and integrity of its human creators.

"AI’s intelligence is derived from human intelligence, and its design is based on the ways we make sense of the world," Dr. Bartlett says. "If we start to see problems in the AI programs, it’s a chance to reflect inward. It gives us two opportunities: change the AI, and change our practices that reflect it."